6. What is Latency?

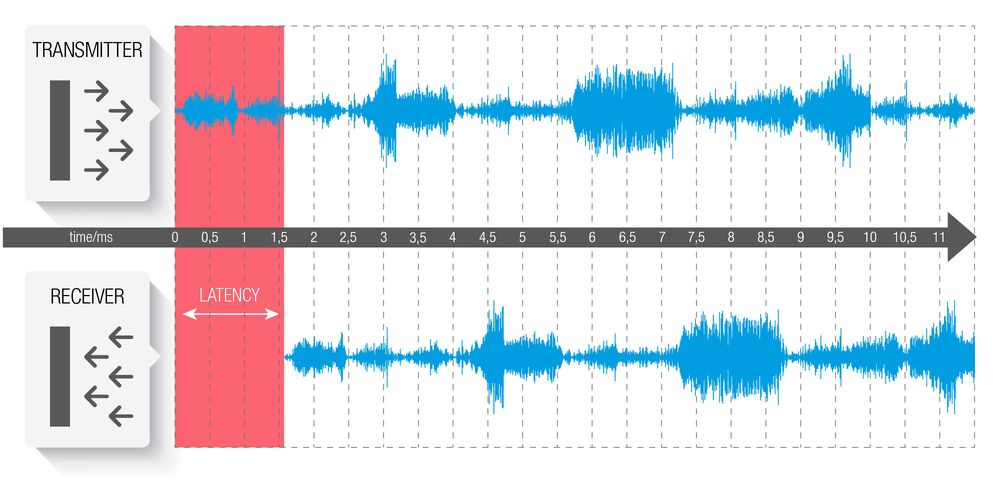

It takes a certain amount of time for audio data to travel from the outside world, over a bus, and into the computer. There's the sampling and conversion to digital (whole-number) values, the communication with the driver, the negotiation with the operating system, and finally the processing by the software. All of this takes time, and we call this delay 'latency'.

Latency is further complicated by the fact that the data flowing into the computer over the bus must be regulated to prevent loss or disorder. To keep the stream constant, the computer needs some built-in thinking time, and this is provided by a 'buffer': a block of memory that fills with incoming audio and gives the computer the breathing space to organise the stream properly, without gaps or lost data. A larger buffer lets the computer cope reliably with more data, but it also increases the latency. Reducing the latency therefore means making the buffer smaller, which in turn makes the computer work harder to keep the stream flowing.
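As a rough sketch, the delay a single buffer adds is its size in samples divided by the sample rate. This assumes just one buffer's worth of delay; a real round trip also includes the output buffer, the converters and driver overhead, so measured figures will be higher. The function name is illustrative only.

```python
def buffer_latency_ms(buffer_size_samples: int, sample_rate_hz: int) -> float:
    """Time taken to fill one buffer, in milliseconds (illustrative helper)."""
    return 1000.0 * buffer_size_samples / sample_rate_hz

# Each halving of the buffer halves the delay that buffer contributes.
for size in (64, 128, 256, 512, 1024):
    print(f"{size:5d} samples @ 48kHz -> {buffer_latency_ms(size, 48000):6.2f} ms")
```

For example, a 128-sample buffer at 48kHz works out at about 2.7ms, while 1024 samples takes over 21ms.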

There's a balance going on between the buffers and the processor, between the number of possible audio channels and the speed of response. Make the buffers too small and the audio will pop and click as the processor struggles to keep up with the data flowing through. Make them too large and the software feels sluggish and unresponsive, and delays can be heard when monitoring.
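Why small buffers make the processor work harder can be illustrated with a deliberately simplified model: each buffer must be processed before the next one is due (within buffer size divided by sample rate), and every buffer carries some fixed overhead regardless of its size. The overhead and per-sample cost figures below are hypothetical placeholders, not measurements of any real system.

```python
# Hypothetical cost model: a fixed overhead per buffer plus a cost per sample.
FIXED_OVERHEAD_MS = 0.5      # hypothetical per-buffer cost (driver, OS, plug-in setup)
COST_PER_SAMPLE_MS = 0.004   # hypothetical processing cost per sample

def buffer_deadline_ms(buffer_size: int, sample_rate: int) -> float:
    """Time available before the next buffer arrives."""
    return 1000.0 * buffer_size / sample_rate

def processing_time_ms(buffer_size: int) -> float:
    """Time the (hypothetical) system needs to process one buffer."""
    return FIXED_OVERHEAD_MS + COST_PER_SAMPLE_MS * buffer_size

def will_drop_out(buffer_size: int, sample_rate: int) -> bool:
    """True if processing can't keep up, which is heard as pops and clicks."""
    return processing_time_ms(buffer_size) > buffer_deadline_ms(buffer_size, sample_rate)

for size in (16, 32, 64, 128, 256):
    load = processing_time_ms(size) / buffer_deadline_ms(size, 48000)
    status = "dropouts" if will_drop_out(size, 48000) else "ok"
    print(f"{size:4d} samples: load {load:5.0%} {status}")
```

Because the fixed overhead is paid once per buffer, it eats a larger share of the available time as buffers shrink, so the load climbs until the processor can no longer keep up.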

When is Latency a Problem?

Delays are not always a problem, and in fact most people don't notice the effects of latency on modern systems. Few people would notice a 3ms delay, which is a common low-latency setting; to put it in context, it's about the delay you'd experience standing three feet (roughly a metre) from your guitar amp. Once the delay rises above about 10ms, the brain starts to notice the lag.
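Sound travels through air at roughly 343 m/s (at about 20°C), so any delay can be converted into an equivalent distance from a speaker. A small sketch of that conversion; the helper names are illustrative.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # in air at roughly 20 degrees C

def acoustic_delay_ms(distance_m: float) -> float:
    """Delay for sound to travel a given distance."""
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S

def distance_for_delay_m(delay_ms: float) -> float:
    """Distance sound covers in a given delay."""
    return SPEED_OF_SOUND_M_PER_S * delay_ms / 1000.0

print(f"3 ms  ~ {distance_for_delay_m(3):.2f} m")
print(f"10 ms ~ {distance_for_delay_m(10):.2f} m")
```

On these figures, 3ms is equivalent to standing about a metre from the amp, and 10ms to about 3.4m.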

There are a couple of situations where latency can be a problem. The first is when monitoring audio through your software. For instance, you might be recording a singer who wants to hear their voice with reverb on, so you've got not just the latency of your system but also any processing delay the reverb plug-in adds. If the latency is too high, the voice they hear in their headphones will lag behind what they are singing, which is highly off-putting. The second is when using virtual instruments (software synthesisers) played live from a keyboard. If the latency is too high there will be a delay between striking a key and hearing a sound, which can make playing impossible.
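When monitoring through plug-ins, the delays add up. A minimal sketch, assuming the round trip is one input buffer plus one output buffer plus whatever latency the plug-in reports, plus a hypothetical fixed allowance for the converters and driver. All figures, including the reverb's 1024-sample delay, are illustrative rather than measured.

```python
def monitoring_latency_ms(buffer_size: int, sample_rate: int,
                          plugin_latency_samples: int = 0,
                          converter_overhead_ms: float = 1.0) -> float:
    """Approximate input-to-headphone delay when monitoring through software.

    Assumes one input and one output buffer of equal size; converter_overhead_ms
    is a hypothetical allowance for AD/DA conversion and driver overhead.
    """
    samples = 2 * buffer_size + plugin_latency_samples
    return 1000.0 * samples / sample_rate + converter_overhead_ms

dry = monitoring_latency_ms(128, 48000)
# Hypothetical reverb that reports 1024 samples of added latency.
wet = monitoring_latency_ms(128, 48000, plugin_latency_samples=1024)
print(f"dry: {dry:.1f} ms, with reverb: {wet:.1f} ms")
```

With these hypothetical numbers, the dry signal stays under the 10ms mark, but the reverb pushes the singer's monitoring delay well past it.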

Finding the balance between latency and the processing power of your computer is the key to a stable, usable studio.

- Unbalanced, balanced or auto-balanced?

- What is stacking?

- Which level, -10dBV or +4dBu?

- What is Phantom Powering?

- What's the difference between Coaxial and Optical S/PDIF?

- Does 96kHz sound better?

- Is the quality of converters important?

- How high should the signal-to-noise ratio be?

An unbalanced signal travels down a single conductor plus a ground (the cable's shield). A balanced line uses two conductors: the original signal travels down one, and a mirror image with its polarity reversed (often called phase-reversed) travels down the other. At the receiving end the reversed copy is flipped back and the two are combined, so any interference picked up equally by both conductors along the way cancels out. Auto-balanced outputs switch between balanced and unbalanced operation depending on what’s connected to them.
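A minimal numerical sketch of the cancellation, assuming ideal wiring where the interference lands identically on both conductors (in practice the cancellation is very good but not perfect). The function names and signals are illustrative.

```python
import math

def send_balanced(signal):
    """Hot leg carries the signal, cold leg carries a polarity-reversed copy."""
    return list(signal), [-s for s in signal]

def receive_balanced(hot, cold):
    """Flip the cold leg back and sum; halve to restore the original level."""
    return [(h - c) / 2 for h, c in zip(hot, cold)]

# A short test tone, plus interference that hits both wires equally.
signal = [math.sin(2 * math.pi * n / 16) for n in range(32)]
hum = [0.3 * math.sin(2 * math.pi * n / 5) for n in range(32)]

hot, cold = send_balanced(signal)
hot = [h + x for h, x in zip(hot, hum)]    # noise picked up along the cable
cold = [c + x for c, x in zip(cold, hum)]
recovered = receive_balanced(hot, cold)

unbalanced = [s + x for s, x in zip(signal, hum)]  # single wire: noise stays in

balanced_error = max(abs(r - s) for r, s in zip(recovered, signal))
unbalanced_error = max(abs(u - s) for u, s in zip(unbalanced, signal))
print(f"balanced error: {balanced_error:.2e}, unbalanced error: {unbalanced_error:.3f}")
```

Subtracting the legs doubles the wanted signal while the identical noise on each leg subtracts to zero, leaving only floating-point rounding error; the unbalanced line keeps all of the hum.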

Combining two or more interfaces of the same make and type to expand the number of connections available. Drivers must support this in order for it to work, and not all do. Audio interfaces from different manufacturers cannot be stacked in this fashion.

Ultimately it depends on the equipment. +4dBu is “hotter” than -10dBV, carrying roughly 12dB more level, so on output it could overload an amplifier designed for -10dBV and cause distortion. On the other hand, -10dBV can be too quiet and leave some of the dynamic range of your processing equipment unused. Most interfaces can be switched between the two, so that you can find the ideal level for your particular setup.
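The two nominal levels use different references: +4 is normally quoted in dBu (0dBu is about 0.775V rms) and -10 in dBV (0dBV is 1V rms), which is why the real gap is nearer 12dB than 14dB. A short sketch of the conversion; the helper names are illustrative.

```python
import math

DBU_REF_V = math.sqrt(0.6)  # 0 dBu = ~0.7746 V rms (1 mW into 600 ohms)
DBV_REF_V = 1.0             # 0 dBV = 1 V rms

def dbu_to_volts(dbu: float) -> float:
    return DBU_REF_V * 10 ** (dbu / 20)

def dbv_to_volts(dbv: float) -> float:
    return DBV_REF_V * 10 ** (dbv / 20)

pro = dbu_to_volts(4)         # +4dBu "professional" level
consumer = dbv_to_volts(-10)  # -10dBV "consumer" level
difference_db = 20 * math.log10(pro / consumer)
print(f"+4dBu = {pro:.3f} V, -10dBV = {consumer:.3f} V, "
      f"difference = {difference_db:.1f} dB")
```

This gives about 1.23V for +4dBu against about 0.32V for -10dBV, a difference of roughly 11.8dB.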

Phantom power is a DC supply, usually 48V, that most condenser microphones need in order to run. It is sent from the interface back down the same cable the audio signal arrives on, so no separate connection is required, hence ‘phantom’.

Coaxial (coax) cables are shielded electrical cables in which the signal conductor runs down the centre, surrounded by insulation and a braided shield; TV antenna cables are coaxial. Optical cables instead carry the signal as light down a fibre-optic cable. Both are suitable for digital signal transmission, although optical is immune to interference from electrical fields along the cable. Coax and optical are not directly compatible with each other and need a converter box to switch between the two formats.

Although in theory we cannot hear the extra information recorded at 96kHz or higher, it often does sound better, primarily because it allows the ‘anti-aliasing’ filters to be placed at such a high frequency that they have far less detrimental effect on the audible spectrum. Many other factors affect audio quality, though, so the most that can be said as a general rule is that 96kHz sounds a little better than 48kHz on the same audio interface. 96kHz also generates twice as much data, so the computer has to work much harder to handle it and needs twice the disk space to store it.
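Two pieces of arithmetic sit behind this answer. The highest frequency a sample rate can capture is half that rate (the Nyquist frequency), which sets how much room is left above the roughly 20kHz limit of hearing for the anti-aliasing filter to roll off; and the amount of data grows in direct proportion to the sample rate. A sketch assuming uncompressed 24-bit stereo; the helper names are illustrative.

```python
def nyquist_hz(sample_rate_hz: int) -> float:
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2

def megabytes_per_minute(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Uncompressed PCM data rate, in megabytes (10**6 bytes) per minute."""
    bytes_per_second = sample_rate_hz * (bit_depth // 8) * channels
    return bytes_per_second * 60 / 1_000_000

for rate in (48_000, 96_000):
    headroom = nyquist_hz(rate) - 20_000  # room above 20kHz for the filter
    size = megabytes_per_minute(rate, 24, 2)
    print(f"{rate // 1000}kHz: filter room above 20kHz = {headroom / 1000:.0f}kHz, "
          f"stereo 24-bit = {size:.2f} MB/min")
```

At 48kHz the filter has only about 4kHz to work in, against about 28kHz at 96kHz, while the data rate doubles from about 17.3 to 34.6MB per minute.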

Yes, but as with many things, the law of diminishing returns comes into play. As a general rule you will get better quality if you pay more, but paying double doesn’t double the quality. Unless you have highly trained ears, you may find it hard to detect any difference as you go further and further up the price range.

In principle, as high as possible. However, the higher you go, the harder it becomes to notice the difference. The theoretical maximum SNR for CD (16-bit) audio is about 96dB, and that’s pretty quiet. To make use of any SNR above 96dB you’d need to work at a higher bit depth such as 24-bit, which is one of the advantages of working at resolutions above 16-bit.
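The 96dB figure follows from the bit depth: each extra bit doubles the number of available levels, adding about 6dB. A quick sketch of the theoretical figure, assuming the simple ratio of full scale to one quantisation step; measurements using a full-scale sine test tone quote about 1.76dB more, and in practice 24-bit converters fall well short of the theoretical 144dB because analogue noise in the circuitry sets the real limit.

```python
import math

def theoretical_dynamic_range_db(bits: int) -> float:
    """20*log10(2**bits): ratio of full scale to one quantisation step, in dB."""
    return 20 * math.log10(2 ** bits)

for bits in (16, 24):
    print(f"{bits}-bit: about {theoretical_dynamic_range_db(bits):.0f} dB")
```

This gives about 96dB for 16-bit and about 144dB for 24-bit.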
